Golden Master (GM)

Phase: Release

Purpose: The Golden Master is required before a First Article can be created. Its ultimate purpose is to ship the software to customers.

Entry Criteria: QA should receive the Final Candidate burned onto a CD.

Activities

In addition to all the testing activities performed during the FC phase, the following activities are carried out:

• Test the CD for viruses.

• Test the CD for block/sector quality.

• Verify the CD structure.

• Verify that all files are installed in the proper locations.

• Verify that all files appear with the correct extensions and icons on their respective platforms.
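The file-location check can be partly scripted. The following is a minimal Python sketch. It assumes QA keeps a per-platform manifest of expected install paths; the manifest and the example path are hypothetical. The sketch reports missing and unexpected files. It does not check icons.

```python
from pathlib import Path


def check_install_manifest(install_root, expected_files):
    """Compare an installed file tree with a manifest of expected relative paths.

    Returns two sorted lists: files that are missing, and files that were
    installed but do not appear in the manifest.
    """
    root = Path(install_root)
    # Every regular file actually present under the install root, as "dir/file" paths.
    installed = {p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()}
    expected = set(expected_files)
    missing = sorted(expected - installed)
    unexpected = sorted(installed - expected)
    return missing, unexpected


# Hypothetical usage against a test installation:
# missing, unexpected = check_install_manifest("/Applications/MyApp", ["MyApp.app/Contents/Info.plist"])
```

An empty `missing` list means every file in the manifest was installed where expected. Each entry in `unexpected` should be checked by hand.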

For more software testing definitions, please go here

Final Candidate

Phase: Final Candidate

Purpose: Ship the software to the customers.

Entry Criteria

• All Showstopper, Alpha, Beta and FC defects thrashed up to a specified number of days before the build is published must be fixed.

• All defects fixed in the previous FC candidate build should have appropriate action taken on them.

• All defects reported by pre-release customers and thrashed up to a specified number of days before the build is published must be fixed.

• The user documentation, except for the known issues and issues resolved, must be reviewed and signed off by Legal, PM, R&D and QA.

• Configuration Documents are defined and reviewed by the stakeholders prior to the publication of the build.

• All test plans and DITs must be updated on iport with all the execution results.

• QA must be able to replicate the documented customer workflow on the candidate build.

• All 3rd party licenses are reviewed and approved by Legal.

• QA has verified that all legal requirements have been met.

• Product has final icons, splash screens and other artwork.

• All localization and UI defects should be fixed and localizable resources frozen in the weekly build prior to the FC build.

• The MTBF (Mean Time Between Failures) of the application should exceed the specified number of hours.

• The Final Candidate build delivered to QA should be installable from CD using the final product installation process.
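MTBF is commonly computed as the total operating time divided by the number of failures observed during that time. The following is a minimal sketch. It assumes QA records the total test hours and the number of failures (for example, crashes or hangs) for a build. The 48-hour threshold in the example is a placeholder for the "specified number of hours", not a value taken from this document.

```python
def mtbf_hours(total_operating_hours, failure_count):
    """Mean Time Between Failures = total operating time / number of failures."""
    if failure_count == 0:
        # No failures observed: MTBF is unbounded for this sample.
        return float("inf")
    return total_operating_hours / failure_count


def meets_mtbf_criterion(total_operating_hours, failure_count, required_hours):
    """True if the measured MTBF exceeds the required number of hours."""
    return mtbf_hours(total_operating_hours, failure_count) > required_hours


# Example: 120 hours of testing with 3 failures gives an MTBF of 40 hours.
print(mtbf_hours(120, 3))                # 40.0
print(meets_mtbf_criterion(120, 3, 48))  # False (48 is a hypothetical threshold)
```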

Activities

• Acceptance testing according to the following criteria:

• Successful execution of Build Acceptance tests.

• Acceptance results shall be published within 1 working day after receiving the Final Candidate build.

• Regress all OpenForQA bugs fixed for the accepted Final Candidate build after publishing the Acceptance test results.

• Regress all Fixed/NLI defects.

• Execute all the identified workflows.

• Execute one round of structured tests and update the results on iport.

• QA must execute Shortcut Key tests on the candidate build.

• Localization testing must be done on the candidate build.

• Performance testing is to be done on the candidate build.

Exit Criteria

• The Activities described above are completed.

• If any new defect is logged or an existing defect is found to recur, the Triage team must approve it with a justification; otherwise the build cannot be certified as GM.

• Documented Training Tutorials are complete.

• All deliverables like Sales Kit, Printed Manuals, Boxes, BOM, Mercury Setup, QLA and XDK should be ready for distribution.

• Older validation codes no longer work, and the installer has no built-in time bomb.

• The build is published on the product server.


First Article

Phase: Release

Purpose: Ship the software to customers.

Entry Criteria

• GM is approved.

• QA requests SCM/WPSI to coordinate the First Article process.

Activities

• Check the Rimage text.

• Perform Installer testing.

• Test the CD for viruses.

• Test the CD for block/sector quality.

• Verify the CD structure.

• Verify that all files are installed in the proper locations.

• Verify that all files appear with the correct extensions and icons on their respective platforms.

• Check for correct splash screen.

• Perform Acceptance testing.

Exit Criteria

• The above activities are completed.

• The First Article is approved as identical to the GM.
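The "identical to GM" check can be supported by comparing file checksums. The following is a minimal Python sketch. It assumes both discs are mounted as directories; the mount points are hypothetical. It compares file contents only. It does not compare the disc label, the Rimage print or the sector layout. Hidden files that the operating system adds to a mounted volume would also be reported as differences.

```python
import hashlib
from pathlib import Path


def tree_digests(root):
    """Map each file's path (relative to root) to the SHA-256 digest of its contents."""
    root = Path(root)
    return {
        p.relative_to(root).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in root.rglob("*")
        if p.is_file()
    }


def differing_files(gm_root, first_article_root):
    """Return sorted relative paths that are missing from one tree or differ in content."""
    gm = tree_digests(gm_root)
    fa = tree_digests(first_article_root)
    return sorted(path for path in gm.keys() | fa.keys() if gm.get(path) != fa.get(path))


# Hypothetical mount points; an empty list means the file contents are identical.
# print(differing_files("/Volumes/GM", "/Volumes/FIRST_ARTICLE"))
```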


EggPlant

Eggplant runs on a Mac OS system called the “Client”, while the Application Under Test (AUT) runs on a separate computer called the “System Under Test” (SUT). Eggplant interacts with the SUT over a network, using a Virtual Network Computing (VNC) server running on the SUT.

Eggplant uses an object-oriented scripting language called SenseTalk. Basic concepts in SenseTalk include Values, Containers, Expressions and Control Structures.

Automated Testing Methodology in EggPlant

Most of the Eggplant activity takes place in four types of windows:

• Remote Screen Window - The Remote Screen appears in an Eggplant window on the client Mac. The user can see and interact with software running on the SUT via the Remote Screen.

• Script Editor Windows - The Script Editor lets the user create and edit scripts. Scripts test the software by simulating user actions with a list of scripted steps.

• Run Window - The Run window shows a variety of levels of detail about the currently running script or scripts.

• Suite Editor Windows - A Suite is a collection of testing elements. With the Suite Editor, the user can manage these elements, including scripts, images, results, schedules and helpers.

Framework

The commonly used framework comprises the following files:

Frame – Common library containing commonly used functions and images. In addition, it also contains the Recovery system used by EggPlant.

To learn more about automation scripts, see Automation Scripts.


Engineering Complete

Pre-EC is the phase in which some of the features within a component have reached EC status.

Phase: Engineering

Purpose: Implement all features as specified in the Software Requirements Specification (SRS).

Entry Criteria

• The requirements completely and accurately define the functionality that was intended to be developed.

• The code-level design of the feature is a complete and accurate reflection of the functionality defined in the requirements.

• The user interface of the software is an accurate and complete representation of what is represented in the user interface specification (UIS).

• The functionality is implemented on all supported operating systems as well as all flavors of the application.

• QA has developed the test plan as per SRS.

Activities

• Once the entry criteria have been met, R&D should communicate to the team, at multiple stages in the development of the component, that requirements have reached an EC status.

• R&D ensures that the requirements can be demonstrated at the GUI layer using an SCM-compiled build, thus ensuring there are no integration issues in the software.

• When declaring requirements have reached an EC status, R&D should communicate the following to the team:

• Build number used to evaluate requirements (daily or weekly build).

• Platform used (Mac or Win).

• Requirement numbers that were tested by R&D.

• Once EC is declared by R&D, an EC review meeting should be held involving all the stakeholders, in which the following activities will occur:

• Software is evaluated against each requirement listed in the SRS.

• Software architecture is evaluated against the design documents.

• Once the EC review meeting is completed, a component EC summary should be sent to the component team and to the Managers/Directors of QA, PM and R&D.

• Test plans should be reviewed by R&D and PM.

• An initial risk analysis should be generated from the customer, developer and testing perspectives.

Exit Criteria

• The Activities described above are completed.

• QA and PM sign off that there are no missing features.

• Test plans are approved by R&D and PM.

• Installers are provided for all future builds.


Defect Tracking

After encountering what appears to be a defect, the first step is to replicate it.

• Repeat the steps leading to the problem. Also try quitting and re-launching, or rebooting, and then repeating the steps.

• Always start from a known state (e.g., a freshly launched application).

• After you have a repeatable sequence of steps that replicate the problem, try to narrow it down.

• Try to eliminate steps that are not required to reproduce the problem.

• Eliminate dependencies on input methods (e.g. keyboard shortcuts vs. mouse actions).

• Replicate it on a second machine.

• Determine if it is platform dependent or a cross platform issue.

• Determine if this is a general problem or specific to this feature.

• Determine if this problem is file dependent or can be replicated in a new file.
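The step-elimination advice can be applied systematically. Remove one step at a time, and keep the removal whenever the bug still reproduces. This is a simplified, greedy form of the delta-debugging idea. `reproduces` is a hypothetical callback that stands for replaying the steps, manually or with an automation tool. The example steps are invented.

```python
def minimize_steps(steps, reproduces):
    """Greedily drop repro steps that are not needed to trigger the bug.

    steps: ordered list of steps known to reproduce the bug.
    reproduces: callable that replays a list of steps and returns True
    if the bug still occurs.
    """
    current = list(steps)
    i = 0
    while i < len(current):
        candidate = current[:i] + current[i + 1:]
        if reproduces(candidate):
            current = candidate  # step i was not required; keep it removed
        else:
            i += 1  # step i is required; try the next one
    return current


steps = [
    "Launch the application",
    "Create a new document",
    "Type some text",
    "Paste an image",
    "Zoom to 200%",
    "Save the document",
]


def bug_occurs(candidate_steps):
    # Hypothetical stand-in: the bug needs only a pasted image followed by a save.
    return "Paste an image" in candidate_steps and "Save the document" in candidate_steps


print(minimize_steps(steps, bug_occurs))  # ['Paste an image', 'Save the document']
```

In the result, every remaining step is needed: removing any one of them makes the bug disappear.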

If it is not obvious whether the behavior is a bug (ambiguous feature behavior), as opposed to a system failure or a graphic output problem, check the Functional Requirements or Use Cases. If the behavior is still unclear, talk to the QA Manager or Supervisor, other team members, or the developer responsible for the feature.

It is also helpful to determine when the bug was introduced. This can help the developers determine if recent changes made in an associated area created a new bug.
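When a range of builds is available, a binary search can find the build that introduced a bug without testing every build. The following is a minimal sketch. It assumes that the builds are in order, that the oldest is known to be good and the newest bad, and that the bug persists in every build after it first appears. `is_bad` is a hypothetical callback that stands for installing a build and running the repro steps. The build numbers are made up.

```python
def first_bad_build(builds, is_bad):
    """Binary-search an ordered list of builds for the first one that shows the bug.

    Assumes builds[0] is good, builds[-1] is bad, and the bug stays in
    every later build once it appears.
    """
    lo, hi = 0, len(builds) - 1  # invariant: builds[lo] is good, builds[hi] is bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_bad(builds[mid]):
            hi = mid
        else:
            lo = mid
    return builds[hi]


# Hypothetical weekly builds 100..116, where the bug first appeared in 109.
print(first_bad_build(list(range(100, 117)), lambda build: build >= 109))  # 109
```

For 17 builds, this takes four test runs instead of up to sixteen.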


Defect Thrashing Procedure

Setting a defect's priority is an automatic process. The QA Engineer responsible for thrashing the defect has to answer the following questions:

• What is the impact on customer workflow?

• Does this Bug stop us from Testing?

• What is the probability of the defect occurring in the customer’s workflow?

• What is the origin of the defect?

• What is the impact of the defect on other related modules?

Depending upon the answers provided to the above questions by the QA Engineer, the system automatically assigns a priority to the defect.
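The tracking system's actual rules are not described here. The following is only a hypothetical illustration of how the answers could be converted into a priority automatically. Every detail is an assumption: the 0-3 rating scale, the thresholds, and the mapping onto the Showstopper/Alpha/Beta/FC levels used elsewhere in this document.

```python
# Hypothetical: each answer is rated 0 (none/low) to 3 (severe/high).
QUESTIONS = (
    "customer_workflow_impact",
    "blocks_testing",
    "probability_in_customer_workflow",
    "origin",  # e.g. 3 for a regression, lower for long-standing defects
    "impact_on_related_modules",
)


def assign_priority(answers):
    """Turn the thrashing answers into a priority using hypothetical thresholds."""
    missing = [q for q in QUESTIONS if q not in answers]
    if missing:
        raise ValueError(f"unanswered questions: {missing}")
    for q in QUESTIONS:
        if not 0 <= answers[q] <= 3:
            raise ValueError(f"{q} must be rated 0-3")
    if answers["blocks_testing"] == 3:
        return "Showstopper"  # a defect that stops testing outranks everything else
    score = sum(answers[q] for q in QUESTIONS)
    if score >= 10:
        return "Alpha"
    if score >= 6:
        return "Beta"
    return "FC"
```

The point of the design is that the engineer only rates the defect. The priority follows mechanically from the ratings, so two engineers who answer the same way get the same priority.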


Defect Logging

• Check whether it is already known. Search the bug database to see if the bug you have found has already been reported.

• If the defect you have found is a duplicate but the one in the database has a status of Closed, reopen it and enter a comment in the history.

• If the bug you have just found is similar to a bug which is already in the database, but not exactly the same, then the existing report may need to be modified. Add your comments to the bug.

• If it isn't a duplicate, write it up after isolating it.

Writing up Problem Reports

In general, the Problem Title and Steps to Recreate should be very specific and should not contain editorial comments or opinions. With the exception of Enhancements, these fields should describe "what you did", "what object you did it to" and "what happened". The object must be referred to by its real name. Likewise, correct OS terminology must be used. Using the correct terminology will help others find your bug and reduce the number of duplicate bugs.

The following template shows how to log a clear and concise defect:

/*****************************************************/

Problem Title:

Product name:

Build Tested:

Origin:

O.S. Tested:

O.S. Affected:

Tested in previous version of the product (if applicable):

Affected:

Browser Tested: <>

Browser Affected: <>

(Applicable only to web related defects)

_______________________________________________________________________

Steps to Recreate:

1.

2.

3.

Result:

Expected Result:

What works vs what does not:

Note:

/*****************************************************/

Description of the main fields is given below:

Problem Title:

This is a one-sentence summary that describes the bug. The summary should be concise and include any special circumstances or exceptions. The Problem Title should be accurate enough that someone associated with the project can understand, and even reproduce, the problem from the Problem Title field alone.

Steps to Recreate:

This is a sequence of steps that describes the problem so that anyone can replicate it. Descriptions should be as concise as possible and should generally be no more than 10 steps. The result must be written down separately. The steps should describe only the incorrect behavior. There is a tendency to write an example of similar correct behavior first and then the incorrect behavior, to help justify the bug. This only confuses and frustrates the developer.

Result:

Description of the incorrect behavior, including specific file errors with stack crawls, asserts & user breaks.

Expected Result:

Description of what the specification defines or (if undefined) your expectations. If the expected behavior is at all in question, it probably needs to be escalated to management for definition.

What works vs what does not:

a) Should describe what works and what does not (mandatory for almost all defects, with a few exceptions).

b) Special notes describing the defect, which is helpful for R&D to fix the defect (optional).

c) Related defect IDs (optional).

Note:

This is additional information that helps the developer understand the bug. It could be the version in which the bug was introduced, things you discovered while narrowing the bug down, circumstances in which the bug does not occur, an example of the correct behavior elsewhere in the product, etc.

Proofread your bug reports and try to reproduce the problems following the steps exactly as written.
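Teams that generate reports from a tool can encode the template and the basic checks above. The following is a minimal Python sketch that covers only a subset of the template fields. The class, its method names and the example values are hypothetical. The 10-step limit comes from the Steps to Recreate guideline.

```python
from dataclasses import dataclass

MAX_STEPS = 10  # guideline from the Steps to Recreate description


@dataclass
class ProblemReport:
    """A subset of the template fields; other fields would be added the same way."""
    title: str
    product: str
    build: str
    os_tested: str
    steps: list[str]
    result: str
    expected: str
    note: str = ""

    def problems(self):
        """Return reasons the report is not ready to log (an empty list if none)."""
        issues = []
        if not self.title.strip():
            issues.append("Problem Title is empty")
        if not self.steps:
            issues.append("Steps to Recreate are missing")
        elif len(self.steps) > MAX_STEPS:
            issues.append(f"{len(self.steps)} steps; try to narrow it to {MAX_STEPS} or fewer")
        if not self.result.strip() or not self.expected.strip():
            issues.append("Result and Expected Result must both be filled in")
        return issues

    def render(self):
        """Format the report in the field order used by the template."""
        lines = [
            f"Problem Title: {self.title}",
            f"Product name: {self.product}",
            f"Build Tested: {self.build}",
            f"O.S. Tested: {self.os_tested}",
            "",
            "Steps to Recreate:",
        ]
        lines += [f"{n}. {step}" for n, step in enumerate(self.steps, start=1)]
        lines += ["", f"Result: {self.result}", f"Expected Result: {self.expected}"]
        if self.note:
            lines += ["", f"Note: {self.note}"]
        return "\n".join(lines)
```

Running `problems()` before logging catches the most common omissions. It is no substitute for proofreading the report and replaying the steps as written.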

See also Defect Thrashing Procedure and Defect Tracking for more information.

Concurrent Versions System (CVS)

• It allows developers to access their code from anywhere with an Internet connection

• It maintains a history of changes made to all files in the project directory

• It gives users an automatic backup of all their work and the ability to roll back to previous versions, if needed

• CVS runs on a central server that all team members can access. Team members simply check out code, and then check it back in when done

The process of updating a file in the database consists of three steps:

• Get current version of file from database

• Merge any changes between the database version and the local version

• Commit the file back to the database
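The three steps can be illustrated with a toy model in which a commit is accepted only if it is based on the current revision. This mirrors how CVS refuses a commit from an out-of-date working copy. It is a hypothetical in-memory sketch, not CVS itself: `Repository`, `update_and_commit` and the naive merge function are invented for illustration. Real CVS performs a line-based three-way merge.

```python
class StaleCopyError(Exception):
    """Raised when a commit is based on a revision that is no longer current."""


class Repository:
    """Toy stand-in for the server-side database (hypothetical, not CVS itself)."""

    def __init__(self, text=""):
        self.revisions = [text]  # revision n is stored at index n

    def head(self):
        """Step 1: get the current revision number and text."""
        return len(self.revisions) - 1, self.revisions[-1]

    def commit(self, base_rev, new_text):
        """Step 3: accept new text only if it was based on the current revision."""
        head_rev = len(self.revisions) - 1
        if base_rev != head_rev:
            raise StaleCopyError(f"based on r{base_rev}, but head is r{head_rev}; update first")
        self.revisions.append(new_text)
        return head_rev + 1


def update_and_commit(repo, base_text, local_text, merge):
    """Get the current version, merge the local changes into it, then commit."""
    head_rev, head_text = repo.head()
    merged = merge(base_text, local_text, head_text)  # step 2: merge
    return repo.commit(head_rev, merged)


def append_only_merge(base, local, head):
    """Naive merge that only handles lines appended to the end of the file."""
    return head + local[len(base):]


repo = Repository("line 1\n")
rev, text = repo.head()                     # Alice and Bob both start from r0
repo.commit(rev, text + "Bob's line\n")     # Bob commits r1
try:
    repo.commit(rev, text + "Alice's line\n")  # Alice's copy is now stale
except StaleCopyError as err:
    print(err)
update_and_commit(repo, text, text + "Alice's line\n", append_only_merge)  # Alice commits r2
```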

Two pieces of software are required to setup CVS:

• Server

• Client

The server handles the database end and the client handles the local side.

Tracking Changes

The standard tool used for tracking changes is “diff”.
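To show what diff output looks like, Python's standard `difflib` module can produce the same unified format that `diff -u` (and `cvs diff -u`) prints. The file contents and the revision labels below are made up.

```python
import difflib

# Two revisions of a hypothetical file, as lists of lines.
old = ["Launch the application\n", "Open a new document\n", "Save the document\n"]
new = ["Launch the application\n", "Open an existing document\n", "Save the document\n"]

diff = difflib.unified_diff(
    old, new, fromfile="steps.txt (revision 1.1)", tofile="steps.txt (working copy)"
)
# Unchanged lines start with a space, removed lines with "-", added lines with "+".
print("".join(diff), end="")
```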

Authentication

The user needs to authenticate to the CVS server. The following information must be specified:

• CVS server

• Directory on that server which has the CVS files

• Username

• Authentication method

The combination of all of these is sometimes called the “CVSROOT”.
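A remote CVSROOT combines these pieces in the form `:method:user@host:/path/to/repository`. The helper below is a small hypothetical sketch that builds that string. The user name, host name and repository path in the example are invented.

```python
def make_cvsroot(method, user, host, repository_path):
    """Combine the connection details into a CVSROOT of the form
    :method:user@host:/path/to/repository
    """
    if not repository_path.startswith("/"):
        raise ValueError("repository path must be absolute")
    return f":{method}:{user}@{host}:{repository_path}"


# Hypothetical server details:
print(make_cvsroot("pserver", "jdoe", "cvs.example.com", "/usr/local/cvsroot"))
# :pserver:jdoe@cvs.example.com:/usr/local/cvsroot
```

The resulting string is typically exported as the `CVSROOT` environment variable or passed with `cvs -d`. With the `pserver` method, `cvs login` prompts for the password before the first checkout.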


Communicating with Various Departments

Research and Development (R&D)

• Effective bug reports.

• Tips for Regression during bug fixing.

• Complex Areas of the code.

• Affected areas after Code Optimization.

• Implementation schedule of features.

• Components with maximum code changes.

• Unit Testing of implemented features.

• Feedback on Test planning.

• Components with high defect rejection.

Product Management (PM)

• Use cases for the features.

• Customer Workflow and frequency of usage.

• Feedback on the Quality of the component.

• Feedback on Usability of the components.

• Feedback on Test planning.

Technical Support

• Type and frequency of queries.

• Issues reported and workarounds given for the components.

• Overall feedback for a Product.

• Integration of a particular feature with other features.

• Expectations of the customer from our Products.

• Third-party XTensions used by the customers.

Customers

Email Communication:

Do’s

• Always start the email with a friendly salutation.

• Run a spell check on the emails before sending them to the customer.

• Re-read the email before sending it to ensure that the email conveys the right message and does not sound too cold or rude.

• Include a confidentiality notice in your emails.

• Keep the MDA in CC and the customer in the TO list while replying.

• If the customer is not responding, write to the respective MDA.

Don’ts

• Avoid writing emails with unclear subject lines.

• If unsure about the customer’s gender, refrain from using Mr/Mrs.

• Don’t use fancy fonts and background images in the email.

• Don’t forward internal mails/communication to the customer.

• Don’t make any commitments regarding bug fixes and feature enhancements.

• Don’t use abbreviations which are not commonly understood.

• Don’t write emails in all capital letters.

Phone Conversations:

Do’s

• Speak slowly and clearly.

• Greet the customer when starting and when closing a phone conversation.

Don’ts

• Don’t interrupt the customer.

• Don’t let anger or resentment show in your tone.


Beta Certification

Phase: Beta

Purpose: Present product to external customers for environmental testing including use of intended configuration, hardware, workflow, network etc.

Entry Criteria:

• All Showstopper, Alpha and Beta defects thrashed up to a specified number of days before the build is published must be fixed.

• Defects that depend on external implementation must have possible fix dates (before the FC date). All exceptions are escalated to senior management.

• All defects fixed in the previous Beta candidate build should have appropriate action taken on them.

• All defects reported by pre-release customers (Showstopper, Alpha and Beta) and thrashed up to a specified number of days before the build is published must be fixed.

• All the Gray Area tasks identified by R&D prior to Alpha build must be completed before Beta build is delivered to QA.

• Results of Performance tests are available and all Showstopper, Alpha and Beta defects have been resolved.

• QA must complete one round of site visits for predefined customers.

• QA must be able to replicate the documented customer workflow on the beta build.

• All exceptions must be approved by competent authority.

• UI specification is complete and signed off. Pixel perfect reviews are finished and all identified issues are resolved.

• Customer pre-release documentation (What to test, Known Issues, New features list, test Documents) is complete.

• The help files must be part of the Beta candidate build and must be launched from the application.

• All other documentation must be reviewed, and the final drafts must be installed by the installers. The structure of the installed build must match the configuration document reviewed by the stakeholders.

• All test plans and DITs are reviewed and approved by R&D and PM, and the testware is updated on the shared location.

• All functional requirements from PM and design doc from R&D should be up-to-date and approved.

• The MTBF (Mean Time Between Failures) of the application should exceed the specified number of hours.

• The UI implementation is complete in all respects.

• All localization changes (Application and XTensions) should have been completed.

• The file format should be frozen, i.e., any file saved in the Beta build or later can be opened without data loss.

Activities

• Acceptance testing by QA according to following criteria:

• Successful execution of Build Acceptance tests i.e. Smoke test and Manual test.

• Acceptance results shall be published within 1 working day after receiving the Beta Build.

• Regress all OpenForQA bugs fixed for the Accepted Beta build after publishing the Acceptance test results.

• Regress all Fixed/NLI Showstopper, Alpha and Beta defects.

• Execute all the identified workflows.

• Execute one round of structured tests and update the results on the shared location.

• Gather, document and react to the feedback from external sources.

Exit Criteria:

• The above activities are completed.

• If any Showstopper, Alpha or Beta defect is rejected, PM must approve it as an exception.

• Beta build distributed to testers, including external customers.


Automation Scripts

Contents

• File Summary: Summary of the file – Functionality name, Creation date, and scripter

• Script Data types and Variables

• Script Functions: Commonly used functions in the script

• Positive Test scripts: All positive test cases according to the Test Case Document

• Negative Test scripts: All negative test cases according to the Test Case Document

• Test plan file: Calls all the test cases defined in the script file
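The script layout above does not depend on any particular tool. As a rough illustration only, the sections might look like this in a Python test file. The feature, the width limits and every function name are hypothetical. An Eggplant suite would express the same structure in SenseTalk.

```python
"""
File Summary
  Functionality: Text box resizing (hypothetical feature)
  Created:       <date>
  Scripter:      <name>
"""

# Script data types and variables
MIN_WIDTH, MAX_WIDTH = 1, 500  # hypothetical limits from the Test Case Document


# Script functions: commonly used helpers
def resize_text_box(width):
    """Stand-in for driving the application; True if the resize is accepted."""
    return MIN_WIDTH <= width <= MAX_WIDTH


# Positive test scripts
def test_resize_within_limits():
    assert resize_text_box(250), "resize to 250 should be accepted"


# Negative test scripts
def test_resize_below_minimum():
    assert not resize_text_box(0), "resize to 0 should be rejected"


# Test plan: calls every test case defined in this file
def run_test_plan():
    results = {}
    for test in (test_resize_within_limits, test_resize_below_minimum):
        try:
            test()
            results[test.__name__] = "PASS"
        except AssertionError as err:
            results[test.__name__] = f"FAIL: {err}"  # clear message on failure
    return results


print(run_test_plan())
```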

Automation scripts also use pre-created Test Files which include:

• Legacy Documents

• Image files of different formats

• Different Font Files

• MS Word, Excel files etc.

Audience

The automated scripts are created for use by the QA team.

Review:

Purpose

To verify that the test cases are automated according to the pass/fail criteria specified in the Test Case Document.

Who should review the Automation Scripts?

Automation scripts for each feature in the application are to be reviewed by the primary person assigned for manual testing of that feature.

Automation Script Review Checklist

• Verify that all verifications listed in the pass/fail criteria are performed by the script.

• Verify proper exception handling for all automated test cases.

• Verify that there is no redundant code and functions are used wherever necessary.

• Verify that proper error messages are displayed in the event of a test case failure.

To learn about AppleScript testing, see Apple Scripting.

To learn about Eggplant, see Eggplant.

To learn about Silk Test, refer to Silk Test Q&A.

To learn about WinRunner, refer to WinRunner Q&A.


Apple Scripting

AppleScript allows users to create sets of written instructions, known as scripts, to automate repetitive tasks, customize applications, and control complex workflows.

The R&D team adds AppleScript support to the product wherever it is required. The role of QA is to test all the features of the application that are made scriptable.

Framework

The commonly used framework for AppleScript is divided into two parts:

• AS suite

It consists of the AppleScripts that provide the test case inputs and steps.

• EggPlant suite

It consists of scripts written in Eggplant, which verify at the application’s UI the work performed by the AppleScripts.

To learn more about automation scripts, see Automation Scripts.


Alpha Certification

Phase: Alpha

Purpose: Present fully functional product to customers for testing.

Entry Criteria

• All Showstopper and Alpha defects thrashed up to a specified number of days before the build is published must be fixed.

• Defects which are dependent on external implementation should have possible fix dates.

• All defects fixed in the previous Alpha candidate build should have appropriate action taken on them.

• UI Specification is complete and accepted by PM, QA and R&D.

• Customer pre-release documentation, such as What to Test, Known Issues and New Features, is complete.

• Help Files should be launched within the application.

• Configuration Documents are defined and reviewed by stakeholders.

• All test plans reviewed and approved by R&D and PM. Final draft of DIT in progress or sent for review.

• UI implementation should be ready for pixel perfect review.

• All localization changes should have been completed.

• All workflows are defined, reviewed and accepted by stakeholders.

Activities

• Acceptance testing according to following criteria:

• Successful execution of Build Acceptance tests i.e. Smoke test and Manual test.

• Acceptance results shall be published within 1 working day after receiving the Alpha build.

• Regress all OpenForQA bugs fixed for the Accepted Alpha build after publishing the Acceptance test results.

• Regress all Fixed/NLI Showstoppers and Alpha defects.

• Automated Focus Results should not report any new Showstoppers and Alpha defects or result in reopening of the closed ones.

• QA shall execute structured tests on the Alpha candidate build and update the results on the shared location.

• Execute all the identified workflows.

Exit Criteria

• The Activities described above have been completed.

• If any Showstopper or Alpha defect is rejected, the build cannot be certified as Alpha. However, for a rejected defect with lower priority, the decision to certify the product shall be that of the Triage team.


Ad Hoc Testing

The following are some characteristics of ad hoc testing:

• It involves utilizing strange and random input to determine if the application reacts adversely to it.

• There is no structured testing involved and no test cases are run. It involves various permutations and combinations of different inputs that may affect the functionality of a component.

• It also involves integration of different functionalities which is outside the normal scope of structured testing.

• It is carried out alongside structured testing.

• It is done to ascertain that the product is running successfully as a whole under any circumstance.

• It is basically an approach to test the product from user’s perspective.

• It is unscripted, unrehearsed, and improvisational.
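Ad hoc testing itself is unscripted, but a small helper can supply the "strange and random input" described above while the tester explores. The following is a minimal Python sketch. `parse_page_range` is a hypothetical function under test. The list of string lengths and the extra characters are arbitrary choices. A fixed seed lets a failing run be repeated exactly.

```python
import random
import string


def random_inputs(count, seed=None):
    """Yield unusual strings: empty, very short, boundary lengths, non-ASCII."""
    rng = random.Random(seed)  # a fixed seed makes a failing run reproducible
    alphabet = string.printable + "éüß中文\u200b"  # \u200b is a zero-width space
    for _ in range(count):
        length = rng.choice([0, 1, 2, 255, 256, 10_000])
        yield "".join(rng.choice(alphabet) for _ in range(length))


def probe(function, count=200, seed=1):
    """Call function with random inputs and collect any exceptions it raises."""
    failures = []
    for value in random_inputs(count, seed):
        try:
            function(value)
        except Exception as exc:
            failures.append((value[:40], repr(exc)))
    return failures


def parse_page_range(text):
    """Hypothetical function under test: parses input such as "3-7"."""
    start, end = text.split("-")
    return int(start), int(end)


print(len(probe(parse_page_range)), "inputs raised an exception")
```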


Acceptance Testing

Acceptance cases are categorized as R1 cases, where R1 stands for Rotation 1, i.e., the test cases executed every week when the weekly build is published.

These are the critical test cases executed on every Weekly build. All Acceptance test cases would fall in this category.

The following is the acceptance procedure followed by QA:

• QA receives an SCM-compiled build from R&D.

• QA conducts Acceptance testing on the very day of the publishing of the weekly build by executing all the R1 cases.

• If no R1 cases are failing then the build is declared “Accepted”. QA will now test further on this Accepted build.

• If one or more R1 cases fail, the build is declared “Rejected”. R&D and PM are informed of the failing test cases and their corresponding defect numbers. QA does not test the rejected build any further and continues working on the older build until a new build passes the Acceptance criteria.
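The accept/reject rule above is simple enough to state as code. The following is a minimal sketch. The test case IDs and defect numbers are hypothetical. The function returns both the verdict and the failing cases with their defects, which is the information passed to R&D and PM.

```python
def acceptance_verdict(r1_results):
    """Decide whether a weekly build is Accepted or Rejected.

    r1_results maps each R1 test case ID to a (passed, defect_number) pair,
    where defect_number is None for passing cases.
    """
    failing = sorted(
        (case, defect) for case, (passed, defect) in r1_results.items() if not passed
    )
    verdict = "Rejected" if failing else "Accepted"
    return verdict, failing  # failing cases and their defects go to R&D and PM


# Hypothetical R1 results for one weekly build:
results = {
    "R1-001 Launch application": (True, None),
    "R1-002 Open legacy document": (False, 48213),
    "R1-003 Print to PDF": (True, None),
}
print(acceptance_verdict(results))
```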


Testing definitions

Ad Hoc Testing: Testing carried out using no recognized test case design technique.

Alpha Testing: Testing of a software product or system conducted at the developer’s site by the customer.

Automated Testing: Software testing which is assisted with software technology that does not require operator (tester) input, analysis, or evaluation.

Bug: glitch, error, goof, slip, fault, blunder, boner, howler, oversight, botch, delusion, issue, problem.

Beta Testing: Testing conducted at one or more customer sites by the end-user of a delivered software product or system.

Benchmarks: Programs that provide performance comparison for software, hardware, and systems.

Black Box Testing: A testing method where the application under test is viewed as a black box and the internal behavior of the program is completely ignored. Testing occurs based upon the external specifications. Also known as behavioral testing, since only the external behaviors of the program are evaluated and analyzed. For more details about Black Box Testing, please refer to Testing Methodologies

Boundary Value Analysis (BVA): BVA differs from equivalence partitioning in that it focuses on “corner cases”: values at the edges of the range defined by the specification and values just outside it. For example, if a function expects all values in the range -100 to 1000, test inputs would include -100 and 1000 as well as -101 and 1001. BVA is also often used as a technique for stress, load or volume testing. This type of validation is usually performed after positive functional validation has been completed (successfully) using requirements specifications and user documentation.
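For an integer parameter with an inclusive valid range, the BVA test inputs can be generated mechanically. The following is a minimal sketch. It also includes the value just inside each boundary, a common variant that goes slightly beyond the -101/1001 example in the definition. The function name is hypothetical.

```python
def boundary_values(low, high):
    """Boundary value inputs for an integer parameter valid on [low, high] inclusive.

    Includes each boundary, the value just inside it, and the value just outside it.
    """
    if low >= high:
        raise ValueError("low must be less than high")
    return {
        "valid": sorted({low, low + 1, high - 1, high}),
        "invalid": [low - 1, high + 1],
    }


print(boundary_values(-100, 1000))
# {'valid': [-100, -99, 999, 1000], 'invalid': [-101, 1001]}
```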

Breadth Test: A test suite that exercises the full scope of a system from a top-down perspective, but does not test any aspect in detail.

Cause Effect Graphing:

• Test data selection technique. The input and output domains are partitioned into classes and analysis is performed to determine which input classes cause which effect. A minimal set of inputs is chosen which will cover the entire effect set.

• A systematic method of generating test cases representing combinations of conditions. See: testing, functional. [G. Myers]

Code Inspection: A manual [formal] testing [error detection] technique where the programmer reads source code, statement by statement, to a group who ask questions analyzing the program logic, analyzing the code with respect to a checklist of historically common programming errors, and analyzing its compliance with coding standards. Contrast with code audit, code review, code walkthrough. This technique can also be applied to other software and configuration items.

Code Walkthrough: A manual testing [error detection] technique where program [source code] logic [structure] is traced manually [mentally] by a group with a small set of test cases, while the state of program variables is manually monitored, to analyze the programmer’s logic and assumptions. [G. Myers/NBS] Contrast with code audit, code inspection, code review.

Compatibility Testing: The process of determining the ability of two or more systems to exchange information. Where the developed software replaces an already working program, an investigation should be conducted to assess possible compatibility problems between the new software and other programs or systems.

Defect: The difference between the functional specification (including user documentation) and the actual program text (source code and data). A defect is often reported as a problem and stored in a defect-tracking and problem-management system.

Also called a fault or a bug, a defect is an incorrect part of code that is caused by an error. An error of commission causes a defect of wrong or extra code. An error of omission results in a defect of missing code. A defect may cause one or more failures. Please refer to the article Defect Logging to learn how to log a defect.

Decision Coverage: A test coverage criterion requiring enough test cases that each decision has a true and a false result at least once, and that each statement is executed at least once. Contrast with condition coverage, multiple condition coverage, path coverage, statement coverage.
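A small example makes the criterion concrete. The function below is hypothetical and records each outcome of its single decision. Two test cases are enough for decision coverage, because together they make the decision both true and false and execute both branches.

```python
outcomes = set()  # decision outcomes observed during the test run


def apply_discount(total, is_member):
    """Hypothetical function with one decision: members spending 100+ get 10% off."""
    decision = total >= 100 and is_member
    outcomes.add(decision)  # record the outcome of the decision
    if decision:
        return total * 0.9
    return total


apply_discount(150, True)  # decision is True
apply_discount(50, True)   # decision is False
assert outcomes == {True, False}  # decision coverage achieved
```

These two cases do not achieve condition coverage, because `is_member` is never false. A third case such as `apply_discount(150, False)` would be needed for that.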

Dirty Testing: Also called negative testing.

Dynamic testing: Testing, based on specific test cases, by execution of the test object or running programs.

Equivalence Partitioning: An approach in which inputs are grouped into classes for product or function validation. This usually does not involve combinations of inputs, but rather a single representative value for each class. For example, a given function may have several classes of input that can be used for positive testing. If a function expects an integer and receives an integer as input, this would be considered a positive test assertion. If a character or any input class other than integer is provided, this would be considered a negative test assertion or condition.
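The integer example in the definition can be sketched as a classifier. It assigns each input to a class, and one representative per class is then tested. The function and class names are hypothetical. Treating booleans as a separate invalid class is a Python-specific design choice, because `bool` is a subclass of `int` in Python.

```python
def classify(value):
    """Assign an input to an equivalence class for a function that expects an integer."""
    if isinstance(value, bool):
        # In Python, bool is a subclass of int; treat it as its own (invalid) class.
        return "invalid: boolean"
    if isinstance(value, int):
        return "valid: integer"
    if isinstance(value, str):
        return "invalid: string"
    return "invalid: other type"


# One representative per class stands in for every other member of that class.
for representative in (42, "a", 3.5, True):
    print(repr(representative), "->", classify(representative))
```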

Error: An error is a mistake of commission or omission that a person makes. An error causes a defect. In software development, one error may cause one or more defects in requirements, designs, programs, or tests.

Error Guessing: Another common approach to black-box validation. Black-box testing is testing in which anything other than the source code may be used. This is the most common approach to testing. Error guessing is when random inputs or conditions are used for testing. Random in this case means either a value produced by a computerized random number generator or an ad hoc value or test condition provided by an engineer.

Exception Testing: Identifies error messages and exception-handling processes, and the conditions that trigger them.
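A minimal exception test written with Python's standard `unittest` module. The `withdraw` function and its error messages are hypothetical; each test triggers one condition and checks both the exception type and its message.

```python
import unittest

def withdraw(balance: int, amount: int) -> int:
    """Hypothetical function under test."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    if amount > balance:
        raise ValueError("insufficient funds")
    return balance - amount

class WithdrawExceptionTests(unittest.TestCase):
    def test_rejects_non_positive_amount(self):
        # The condition (amount 0) must trigger this specific message.
        with self.assertRaisesRegex(ValueError, "must be positive"):
            withdraw(100, 0)

    def test_rejects_overdraft(self):
        with self.assertRaisesRegex(ValueError, "insufficient funds"):
            withdraw(100, 150)
```

Saved to a file, the tests can be run with `python -m unittest`.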

Exhaustive Testing (NBS): Executing the program with all possible combinations of values for program variables. Feasible only for small, simple programs.
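A Python sketch of why exhaustive testing only works for tiny input spaces. The hypothetical `majority` function takes three booleans, so all 2**3 = 8 combinations can be checked against an oracle; a function taking two 32-bit integers would need 2**64 cases.

```python
from itertools import product

def majority(a: bool, b: bool, c: bool) -> bool:
    """Hypothetical function under test: True if at least two inputs are True."""
    return (a and b) or (a and c) or (b and c)

# Exhaustive: every combination of the three boolean variables.
cases = list(product([False, True], repeat=3))
for a, b, c in cases:
    # Oracle: count the True inputs directly.
    assert majority(a, b, c) == (sum((a, b, c)) >= 2)

print(len(cases))  # 8
```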

Functional Testing: Application of test data derived from the specified functional requirements without regard to the final program structure. Also known as black-box testing.

Gray Box Testing: Tests involving inputs and outputs, but whose design is informed by knowledge of the code or of the program's operation that would normally be outside the tester's view.

High-level tests: These tests involve testing whole, complete products.

Inspection: A formal evaluation technique in which software requirements, design, or code are examined in detail by a person or group other than the author to detect faults, violations of development standards, and other problems [IEEE94]. A quality improvement process for written material that consists of two dominant components: product (document) improvement and process improvement (document production and inspection).

Integration: The process of combining software components, hardware components, or both into an overall system.

Integration testing: Testing of combined parts of an application to determine if they function together correctly. The ‘parts’ can be code modules, individual applications, client and server applications on a network, etc. This type of testing is especially relevant to client/server and distributed systems. For more details about Integration Testing, please refer to Testing Methodologies

Load Testing: Load testing verifies that a large number of concurrent clients does not break the server or client software. For example, load testing can uncover deadlocks and problems with queues.
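A small in-process load test sketched in Python with `concurrent.futures`. The `Counter` class is a hypothetical stand-in for a server resource; many concurrent clients hammer it, and the test checks that no request was lost. The per-future timeout is there so a hang, such as a deadlock, surfaces as an error instead of blocking forever.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

class Counter:
    """Hypothetical stand-in for a server-side resource shared by clients."""
    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.value = 0

    def handle_request(self) -> int:
        with self._lock:  # without the lock, concurrent updates could be lost
            self.value += 1
            return self.value

def run_load(server: Counter, clients: int, requests_per_client: int) -> int:
    def client() -> None:
        for _ in range(requests_per_client):
            server.handle_request()

    with ThreadPoolExecutor(max_workers=clients) as pool:
        futures = [pool.submit(client) for _ in range(clients)]
        for f in futures:
            f.result(timeout=10)  # a timeout surfaces hangs such as deadlocks
    return server.value

# 50 concurrent clients x 200 requests each must all be counted.
assert run_load(Counter(), clients=50, requests_per_client=200) == 10_000
```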

Quality Assurance(QA): Software QA involves the entire software development PROCESS - monitoring and improving the process, making sure that any agreed-upon standards and procedures are followed, and ensuring that problems are found and dealt with. It is oriented to 'prevention'.

A set of activities designed to ensure that the development and/or maintenance processes are adequate for a system to meet its requirements/objectives. QA is interested in processes.

Retest: Retesting means testing only a specific part of an application again, typically to confirm a fix, without considering how the change affects other parts or the application as a whole.

Regression Testing: Testing the application after a change to a module or part of the application, to check whether the code change has affected the rest of the application.
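One common way to run a regression check is to record baseline results from the previous release and compare the changed code against them. The Python sketch below assumes two hypothetical versions of a tax function; version 2 changes rounding from truncation to round-half-up, and the comparison flags exactly the inputs whose results moved.

```python
def price_with_tax_v1(amount_cents: int) -> int:
    """Previous release (hypothetical): 8% tax, fraction of a cent truncated."""
    return amount_cents + amount_cents * 8 // 100

def price_with_tax_v2(amount_cents: int) -> int:
    """Changed code (hypothetical): 8% tax, rounded half up to the nearest cent."""
    return (amount_cents * 108 + 50) // 100

# Baseline results recorded from the previous release for fixed inputs.
inputs = [0, 99, 100, 1250, 9999]
baseline = {x: price_with_tax_v1(x) for x in inputs}

# Rerun the same inputs on the changed code; keep (expected, actual) pairs that differ.
regressions = {
    x: (baseline[x], price_with_tax_v2(x))
    for x in inputs
    if price_with_tax_v2(x) != baseline[x]
}
print(regressions)  # {99: (106, 107), 9999: (10798, 10799)}
```

Whether such a difference is a defect or an intended change is a decision for the team; the check only makes the change visible.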

Software testing is the process used to help identify the Correctness, Completeness, Security and Quality of the developed Computer Software. Software Testing is the process of executing a program or system with the intent of finding errors.

Test Bed: A test bed is an execution environment configured for software testing. It consists of specific hardware, network topology, operating system, configuration of the product under test, system software and other applications. The Test Plan for a project should be developed from the test beds to be used.

UAT Testing: UAT stands for User Acceptance Testing. This testing is carried out from the user's perspective and is usually done before the release.

Walkthrough: A 'walkthrough' is an informal meeting for evaluation or informational purposes. Little or no preparation is usually required.

WinRunner Questions and Answers

1. Which language are WinRunner test scripts written in?

TSL (Test Script Language), which is C-like.

2. What are the modes of recording tests?

a) Context Sensitive mode: Objects are recorded through the GUI map, so when an object changes, only the GUI map needs to be updated.

b) Analog mode: Analog mode records mouse clicks, keyboard input, and the exact x- and y-coordinates traveled by the mouse. You can switch between Context Sensitive and Analog mode by pressing F2.

3. What are the modes of running tests?

Use Verify mode when running a test to check the behavior of your application, and when you want to save the test results.

Use Debug mode when you want to check that the test script runs smoothly without errors in syntax.

Use Update mode when you want to create new expected results for a GUI checkpoint or bitmap checkpoint.

4. What are the modes for organizing GUI Map files?

a) Global GUI Map File mode: You can create a GUI map file for your entire application, or for each window in your application. Different tests can reference a common GUI map file.

b) GUI Map File per Test mode: WinRunner automatically creates a GUI map file that corresponds to each test you create.

5. What is the testing process in WinRunner?

Create GUI Map:

Use the RapidTest Script wizard to learn the GUI of your application. Alternatively, you can add descriptions of individual objects to the GUI map by clicking objects while recording a test.

Create Test

Debug Test

You can set breakpoints, monitor variables, and control how tests are run.

Run Test

Run tests in Verify mode to test your application.

View Result

One can view the expected results and the actual results from the Test Results window. In cases of bitmap mismatches, one can also view a bitmap that displays only the difference between the expected and actual results.

6. What are logical name and physical description of an object?

The logical name is actually a nickname for the object’s physical description. The physical description contains a list of the object’s physical properties. The logical name and the physical description together ensure that each GUI object has its own unique identification.


8. What does the set_window command do?

set_window specifies the window that subsequent statements act on. If you program a test manually, you need to enter a set_window statement whenever the active window changes.

9. What are the functions of GUI Map Editor and GUI spy?

Use the GUI Spy to view the properties of any GUI object on your desktop and see how WinRunner identifies it.

Use the GUI Map Editor to learn the properties of an individual GUI object, a window, or all GUI objects in a window.

You can modify the set of properties that WinRunner learns for a specific object class using the GUI Map Configuration dialog box.

You must load the appropriate GUI map files before you run tests. WinRunner uses these files to help locate the objects in the application being tested. It is most efficient to insert a GUI_load statement into your startup test.

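10. How are object descriptions edited in the GUI map?

When an object's properties change (for example, a button's label), edit the corresponding property, such as the label, in the object's physical description in the GUI map. You can also change the physical description using regular expressions.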

11. What types of tests does WinRunner create automatically when you run the RapidTest Script wizard?

GUI Regression Test

Bitmap Regression Test

User Interface Test

Test Template: This test provides a basic framework of an automated test that navigates your application. It opens and closes each window, leaving space for you to add code (through recording or programming) that checks the window.

12. Can there be more than one global GUI map file for one application?

Yes, but the additional files must be loaded programmatically. Sometimes the logical name of an object is not descriptive. If you use the GUI Map Editor to learn your application before you record, you can change the object's logical name in the GUI map to a descriptive one by highlighting the object and clicking the Modify button.

GUI map files can also be merged, either automatically (Auto Merge) or manually (Manual Merge). While merging, conflicts between identical window names and object names can be resolved.

13. What is a regular expression, and which two regular-expression properties does WinRunner use?

A regular expression is a string that specifies a complex search phrase in order to enable WinRunner to identify objects with varying names or titles.

In the Physical Description label line, add an “!” immediately following the opening quotes to indicate that this is a regular expression.

The regexp_label property is used for windows only. It operates “behind the scenes” to insert a regular expression into a window’s label description.

The regexp_MSW_class property inserts a regular expression into an object’s MSW_class. It is obligatory for all types of windows and for the object class object.

14. How are objects identified in WinRunner?

Each GUI object in the application being tested is defined by multiple properties, such as class, label, MSW_class, MSW_id, x (coordinate), y (coordinate), width, and height. WinRunner uses these properties to identify GUI objects in your application during Context Sensitive testing.

15. What is the generic object class?

When WinRunner records an operation on a custom object, it generates obj_mouse_ statements in the test script. If a custom object is similar to a standard object, you can map it to one of the standard classes. You can also configure the properties WinRunner uses to identify a custom object during Context Sensitive testing. The mapping and the configuration you set are valid only for the current WinRunner session. To make the mapping and the configuration permanent, you must add configuration statements to your startup test script.

16. What is a virtual object?

By defining a bitmap as a virtual object through the Virtual Object Wizard, you can instruct WinRunner to treat it like a GUI object, such as a push button, when you record and run tests. This makes your test scripts easier to read and understand.

17. What is a synchronization point?

Synchronization points solve timing and window location problems that may occur during a test run. A synchronization point tells WinRunner to pause the test run in order to wait for a specified response in the application.
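The idea behind a synchronization point (pause until the application responds, but give up after a timeout) can be sketched as a generic polling helper in Python. `wait_for` is hypothetical and is not WinRunner's mechanism; the simulated progress bar is also made up for illustration.

```python
import time
from typing import Callable

def wait_for(condition: Callable[[], bool], timeout: float, interval: float = 0.05) -> bool:
    """Poll condition() until it returns True or timeout seconds pass.
    Returns True if the condition was met, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)

# Usage: a simulated progress bar that reaches 100% after about 0.3 seconds.
start = time.monotonic()

def progress() -> int:
    return min(100, int((time.monotonic() - start) / 0.3 * 100))

assert wait_for(lambda: progress() == 100, timeout=2.0)
assert not wait_for(lambda: False, timeout=0.2)  # times out instead of hanging
```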

18. When should synchronization be done?

Synchronization is needed whenever the test must wait, for example:

for the application to retrieve information from a database

for a window to pop up

for a progress bar to reach 100%

for a status message to appear

19. How should synchronization be done?

General: Increase the default time that WinRunner waits. To do so, change the value of the Timeout for Checkpoints and CS Statements option in the Run tab of the General Options dialog box (Settings > General Options).

In Script: Choose Create > Synchronization Point > For Object/Window Bitmap or click the Synchronization Point for Object/Window Bitmap button on the User toolbar. A synchronization point appears as an obj_wait_bitmap or win_wait_bitmap statement in the test script.

20. What are the statements for checking GUI objects?

WinRunner inserts an obj_check_gui statement into the test script if you are checking an object, or a win_check_gui statement if you are checking a window.

To let the test continue when a check fails, choose Settings > General Options. In the General Options dialog box, click the Run tab and clear the Break when verification fails check box. This enables the test to run without interruption.

21. How does one check bitmaps?

WinRunner captures a bitmap image and saves it as expected results. It then inserts an obj_check_bitmap statement into the test script if it captures an object, or a win_check_bitmap statement if it captures an area or window.

22. How is the Function Generator used?

Choose Create > Insert Function > For Object/Window or click the Insert Function for Object/Window button on the User toolbar. Use the pointer to click the # field.

The Function Generator opens and suggests the edit_get_text function.

This function reads the text in the # field and assigns it to a variable. The default variable name is text; to use a different name, type it in the field in place of text. To comment out a line, use Edit > Comment, which places a # sign before it.

23. How can logic for correctness be written in scripts?

By adding tl_step statements to your test script, you can determine whether a particular operation within the test passed or failed, and send a message to the report.

# tl_step(step_name, status, description): status 0 reports a pass, any other value a fail
if (tickets * price == total)
    tl_step("total", 0, "Total is correct.");
else
    tl_step("total", 1, "Total is incorrect.");

24. What debugging tools are used in WinRunner?

Run the test line by line using the Step commands

Define breakpoints that enable you to stop running the test at a specified line or function in the test script

Monitor the values of variables and expressions using the Watch List

25. What are the statements used to write data driven tests?

The table = line defines the table variable.

The ddt_open statement opens the table, and the subsequent lines confirm that the data-driven test opens successfully.

The ddt_get_row_count statement checks how many rows are in the table, and therefore how many iterations of the parameterized section of the test to perform.

The for statement sets up the iteration loop.

The ddt_set_row statement tells the test which row of the table to use on each iteration.

In the edit_set statement, the value “aaa” is replaced with a ddt_val statement.

The ddt_close statement closes the table.
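The same open table / count rows / loop / read values / close structure can be shown in Python with the standard `csv` module. This is an analogy, not TSL: the table contents and `compute_total` (echoing the tickets/price/total example in question 23) are hypothetical.

```python
import csv
import io

# Hypothetical data table; in WinRunner it would be opened with ddt_open.
TABLE = """tickets,price,total
1,50,50
2,50,100
3,40,120
"""

def compute_total(tickets: int, price: int) -> int:
    """Hypothetical function under test."""
    return tickets * price

def run_data_driven(table_text: str) -> list:
    """Run the parameterized check once per table row; return pass/fail per row."""
    rows = list(csv.DictReader(io.StringIO(table_text)))  # open and read the table
    results = []
    for row in rows:  # one iteration per row, like the for / ddt_set_row loop
        actual = compute_total(int(row["tickets"]), int(row["price"]))
        results.append(actual == int(row["total"]))
    return results

print(run_data_driven(TABLE))  # [True, True, True]
```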

26. How are batch tests run in WinRunner?

You choose the Run in batch mode option on the Run tab of the General Options dialog box ( Settings > General Options ) before running the test. This option instructs WinRunner to suppress messages that would otherwise interrupt the test.

27. How are exceptions handled in WinRunner?

Activate Exception Handling

Define Handler Function

WinRunner enables you to handle the following types of exceptions:

Pop-up exceptions: Instruct WinRunner to detect and handle the appearance of a specific window.

TSL exceptions: Instruct WinRunner to detect and handle TSL functions that return a specific error code.

Object exceptions: Instruct WinRunner to detect and handle a change in a property for a specific GUI object.

Web exceptions: When the WebTest add-in is loaded, you can instruct WinRunner to handle unexpected events and errors that occur in your Web site during a test run.


Silk Test Questions and Answers

1. Which types of test cases should be automated?

a) Regression Bugs: The functionality is already present in the software, so regression checks can be rerun automatically.

b) Repetitive Test Cases: Similar types of test cases, where automating one makes it easier to automate the others.

c) Data Driven: When repetitive data inputs are required for test cases.

d) Easy to automate: Test cases that are easy to automate through SilkTest/WinRunner.

e) Database testing: Tests that need input from a database or take output from a database for validation.

f) Acceptance Tests of Builds

2. Why Functional Automation?

Automation is done to reduce repeated and redundant testing. Automation maintains a single standard of quality for your application over time — across releases, platforms, and networks.

3. What are the steps to automate tests with SilkTest?

•Creating a test plan

•Recording a test frame

•Creating Test cases

•Running Test cases and interpreting their results

4. Why Silk?

SilkTest scripts are written in 4Test, an object-oriented fourth-generation language (4GL). SilkTest is used for testing GUI applications, client/server applications, web applications and web browsers.

5. What do the host and the agent do?

SilkTest host software

•The SilkTest host software is the program you use to develop, edit, compile, run, and debug your Silk scripts and test plans.

The Agent

•The 4Test Agent is the software process that translates the commands in Silk scripts into GUI-specific commands.

6. What data types does SilkTest use?

•Built-in Data Types

ANYTYPE

A variable of type ANYTYPE stores data of any type, including user-defined types.

BOOLEAN

A variable of type BOOLEAN stores either TRUE or FALSE.

DATACLASS

The legal values of the DATACLASS type are the names of all the 4Test classes, including user-defined classes

•C Data types

char, int, short, long, unsigned char, unsigned int, unsigned short, unsigned long, float, double

•User Defined Data Types

Example:

type FILE is LIST OF STRING
type COLOR is enum
    red
    green

7. What are the various types of built-in functions in SilkTest?

•Application state

•Array manipulation

•Char/string conversion

•Data type manipulation

8. What are the various types of files in SilkTest?

•Test Frames

•Include Files

•Test Scripts

•Test Plans

•Suite Files

•Option Set

•Result File

9. How are window declarations useful?

•Declarations specify logical names

•Declarations can encapsulate data and functions

10. What are the stages of recording Window declaration?

Two stages for Recording window Declaration:

•Record the window declarations for the main window (including its menus and controls).

•Bring up each dialog one at a time and record a declaration for each.


•Every window declaration consists of a class, an identifier, and one or more tags. The class cannot be changed.

•The window declaration maps the object’s logical, platform-independent name, called the identifier, to the object’s actual name, called the tag. Identifier can be changed.

12. What are the various types of Tags?

•Caption

•Prior text: Prior text tags begin with the ^ character.

•Index: Index tags begin with the # character.

•Window ID: Window ID tags begin with the $ character.

•Location: Location tags begin with the @ character.

13. What are multitags used for?

A multitag gives a window declaration more than one tag, so the object can still be found when one of its tags no longer matches. This is particularly useful where captions change dynamically, such as in MDI applications, where the window title changes each time a different child window is made active. When running test cases, the Agent tries to resolve each part of a multiple tag from top to bottom until it finds an object that matches.

14. How are errors handled in SilkTest?

a) Default Error Handling

If a test case fails (for example, if the expected value doesn’t match the actual value in a verification statement), Silk Test by default calls its built-in recovery system, which:

- Terminates the test case

- Logs the error in the results file

- Restores your application to its default base state in preparation for the next test case.

These runtime errors are called exceptions. They indicate that something did not go as expected in a script. They can be generated automatically by Silk Test, such as when verification fails, when there is a division by zero in a script, or when an invalid function is called.

b) Explicit Error Handling

However, suppose you don’t want Silk Test to transfer control to the recovery system when an exception is generated, but instead want to trap the exception and handle it yourself. To do this, you use the 4Test do...except statement.

c) Programmatically logging an error

•A test case that handles an error with its own error handler, without logging it, passes even though an error occurred. If you want to handle errors locally and still generate an error (that is, log an error in the results file), you can do any of the following:

- After you have handled the error, reraise it using the reraise statement and let the default recovery system handle it

- Call any of the following functions in your script:

The functions and their actions are:

•LogError (string): Writes string to the results file as an error (displayed in red or italics, depending on the platform) and increments the error counter. This function is called automatically if you don’t handle the error yourself.

•LogWarning (string): Same as LogError, except that it logs a warning, not an error.

•ExceptLog ( ): Calls LogError with the data from the most recent exception.
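The three behaviors above (handle silently, handle and log, handle and reraise) can be compared with a Python analogy using try/except. Everything here is hypothetical: `results_log` stands in for the results file, `log_error` plays the role of LogError, and `run_test_case` is a much simplified default recovery system that does not restore any base state.

```python
results_log = []  # hypothetical stand-in for the SilkTest results file

def log_error(message: str) -> None:
    """Plays the role of LogError: record an error in the results."""
    results_log.append(("ERROR", message))

def run_test_case(test) -> str:
    """Simplified default recovery: log any unhandled exception, then report
    the test as failed if it logged at least one error."""
    errors_before = len(results_log)
    try:
        test()
    except Exception as exc:
        log_error(str(exc))
    return "passed" if len(results_log) == errors_before else "failed"

def handled_silently():
    try:
        raise ValueError("verification failed")
    except ValueError:
        pass  # handled locally and never logged, so the test "passes"

def handled_and_logged():
    try:
        raise ValueError("verification failed")
    except ValueError as exc:
        log_error(f"handled locally: {exc}")  # comparable to calling LogError

def handled_and_reraised():
    try:
        raise ValueError("window not found")
    except ValueError:
        raise  # comparable to 4Test's reraise: default recovery logs it

print(run_test_case(handled_silently))      # passed
print(run_test_case(handled_and_logged))    # failed
print(run_test_case(handled_and_reraised))  # failed
```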

15. What are Custom Objects and how are they useful?

•Silk designates an object as custom if it is not an instance of a built-in class.

•Custom classes enable an application to:

- perform functions specific to the application

- enhance standard class functionality

- be maintained and extended easily by the developers

•All custom objects default to the built-in class CustomWin

16. How can custom objects be used?

•Mapping to Known Classes

•Procedure to Class Map a Custom Object

1. Select Record / Window Declarations

2. Place the cursor over the custom object

3. Press Ctrl-Alt. The Record Window Declaration dialog appears

4. Click Class Map. The Class Map dialog appears

5. Select the corresponding standard class

6. Click Add

7. Click OK

8. Click Resume Tracking.

•Adding User-Defined Methods:

Functions specific to a window are called methods. A method is a series of commands that perform a task.

- Methods can have data passed to them

- Methods can return a value

•To create a method specific to a window, define the function within the declaration of the window. The function automatically becomes a member of that window, or a member function.

•Methods Available to an Entire Class

Some methods may be applicable for any instance of a class, not just for a particular object

•Normal function syntax applies:

- Scope is local (to the class)

- May return or take parameters

•Use the following structure to define a new class:

winclass new_sub_class_name : class_name

•Derive your new class from the class that is the logical parent of the custom class.

•To add the new method for a class, place it within this class definition.

•Since you are defining your methods at the class level, there may be multiple instances of the class in your application. When referring to the object within your method, instead of ‘hard coding’ a particular instance refer to it generically with the keyword this.

•‘this’ refers to the specific instance (object) that the method will operate against at runtime. It is an object oriented technique that may be used when defining methods within a window class declaration.

•Steps to implement:

- Derive a new class for each custom class

- Add methods to operate on instances of the class

- Declare objects (or modify existing declarations) of the custom class to be instances of your new defined class.

• Calling DLL functions

•A DLL (Dynamic Link Library) is a binary file containing functions. An application is dynamically linked to this file at run time:

- Enabling the application executable to be smaller

- Allowing multiple applications to share the same set of functions from a single source

•You can access DLL functions, which are either:

- Standard platform API calls

- Application-specific functions

•These DLL functions are found in several files, including:

- Kernel32.dll

- Gdi32.dll

- User32.dll

How are DLL functions called?

•Declare the name of the dll where the function is found

•Declare the name of the function, its return type (if any) and its parameter list (if any)

•Declare any specific data types used by the function

•Declare any constants used by the function

•Call the function from within a SilkTest script.

dll "user32.dll"
    INT MessageBox (HWND hWnd, LPCSTR sText, LPCSTR sTitle, UINT uFlags)

Passing arguments to DLL functions

•Calling DLL functions written in C requires you to use the appropriate C data types:

- If the function uses C type BOOL, substitute type INT

- Pass a string variable (STRING) for a pointer to a character (CHAR*)

- Pass an array or list of the appropriate type for a pointer to a numerical array

- Pass a Silk record for a pointer to a record

•Many applications store part of their functionality in one or more dynamic libraries.

•To call a function in a DLL that is loaded into the process of the application under test, declare the DLL normally, but use the inprocess keyword before the function declaration.

dll "C:\MyPrograms\circle.dll"
    inprocess INT GetRadius (HWND hWnd)

17. Why do we use appstates in SilkTest?

a) An application state is typically used to put an application into the state it should be in at the start of a test case.

Example:

appstate MyAppState () basedon MyBaseState
    // Code to bring the application to the proper state...

appstate MyBaseState ()
    // Code to bring the application to the base state...

18. Which SilkTest function opens a file on the host system? Give an example.

FileOpen function

Example:

// output file handle
HFILE OutputFileHandle
FILESHARE fShare
// now open the file
OutputFileHandle = FileOpen ("mydata.txt", FM_WRITE, fShare)

19. Which SilkTest function would you use to connect to a database? Give its syntax/example.

DB_Connect function

Example:

HDATABASE hdbc
hdbc = DB_Connect ("DSN=QESS;SRVR=PIONI;UID=sa;PWD=tester")

20. Which SilkTest function would you use to query a database? Give its syntax/example.

DB_ExecuteSql function

Example:

HSQL hstmnt
hstmnt = DB_ExecuteSql (hdbc, "SELECT * FROM emp")

21. How would you handle exceptions in a SilkTest script?

Use the do...except statement. It allows a possible exception to be handled by the test case instead of automatically terminating the test case, so the script can handle (or ignore) the exception without halting.

22. What are spawn and rendezvous used for in SilkTest?

a) spawn: Initiates concurrent operations, for example on multiple machines.

b) rendezvous: Blocks execution of the calling thread until all threads that were spawned by the calling thread have completed.

23. What is DefaultBaseState and why do we use it?

Silk Test provides a DefaultBaseState for applications, which ensures the following conditions are met before recording and executing a test case:

i. The application is running

ii. The application is not minimized

iii. The application is the active application

iv. No windows other than the application’s main window are open

Example:

Here is a sample application state that performs the setup for all forward case-sensitive searches in the Find dialog:

appstate Setup () basedon DefaultBaseState
    TextEditor.File.New.Pick ()
    DocumentWindow.Document.TypeKeys ("Test Case
    TextEditor.Search.Find.Pick ()
    Find.CaseSensitive.Check ()
    Find.Direction.Select ("Down")

24. When do we use appstate none?

a) When we do not want our test case to have any base state.

b) If your test case is based on an application state of none, or on a chain of application states ultimately based on none, not all functions within the recovery system are called. For example, SetAppState and SetBaseState are not called, while DefaultTestCaseEnter, DefaultTestCaseExit, and error handling are called.

25. What do the following debugging commands do?

a) Step Into command: use Step Into to step through the function one line at a time, executing each line in turn as you go.

b) Step Over command: use Step Over to speed up debugging if you know a particular function is bug-free.

c) Finish Function command: use Finish Function to execute the script until the current function returns. SilkTest sets the focus at the line where the function returns. Try using Finish Function in combination with Step Into to step into a function and then run it.

26. What would you do if you got the following error while executing your script: Error: Window 'name' is not enabled?

There are two ways to handle this:

a) To turn off the verification globally, uncheck the Verify that windows are enabled option on the Verification tab in the Agent Options dialog (select Options/Agent).

b) To turn off the option case by case in your script, add the following statement just before the line causing the error: Agent.SetOption(OPT_VERIFY_ENABLED, FALSE). While the option is off, SilkTest executes the action regardless of whether the window is enabled.

Then add the following line just after the line causing the error, to turn verification back on: Agent.SetOption(OPT_VERIFY_ENABLED, TRUE).

27. How are custom objects mapped in SilkTest?

There are two ways to map custom objects to standard classes:

a) While recording window declarations, a CustomWin class can be mapped to a standard class. Click the Class Map button in the Record Window Declarations dialog; the Class Map window appears, where you can map the class.

b) While writing scripts, you can choose Options > Class Map from the menu.

Testing Methodologies

Testing: The Art of Destruction. An integral part of Quality Assurance.

Productive QA:

Analytical QA: Comprises activities that ascertain the product's level of quality. Software Testing comes under Analytical QA.

Software Testing:

1. Testing involves operation of a system or application under controlled conditions and evaluating the results.

2. Every test consists of three steps:

Planning: The inputs to be given, the results to be obtained, and the process to follow are planned.

Execution: Preparing the test environment, completing the test, and determining the test results.

Evaluation: Comparing the actual test outcome with what the correct outcome should have been.

The ‘Pareto principle’, applied to testing, says that about 80% of the errors are concentrated in about 20% of the code. This makes it a good criterion for focusing testing effort.

Classifications in Testing:

Various testing methodologies exist, which can best be classified into two major categories:

Testing Methods:

Black Box Testing:

Comparison Testing

Graph Based Testing

Boundary Value Testing

Equivalence class Testing

Gray Box Testing:

Similar to Black box but the test cases, risk assessments, and test methods involved in gray box testing are developed based on the knowledge of the internal data and flow structures.

White Box Testing:

Mutation Testing

Basis Path Testing

Control Structure Testing

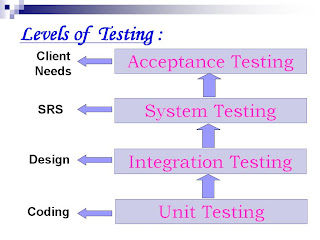

Testing Levels:

Unit Testing

Integration Testing

System Testing

Black Box Testing:

Also called ‘Functional Testing’ as it concentrates on testing of the functionality rather than the internal details of code.

Test cases are designed based on the task descriptions.

Equivalence Class Testing: Test inputs are classified into Equivalence classes such that one input check validates all the input values in that class.

Boundary Value Testing: The boundary values of the equivalence classes are considered and tested, because defects tend to occur at these boundaries and equivalence class testing alone generally misses them.
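A Python sketch of classic boundary value selection for a valid integer range: just below, on, and just above each boundary. The range 0 to 120 and `accepts_age` are hypothetical.

```python
def boundary_values(low: int, high: int) -> list:
    """Boundary picks for a valid range [low, high]:
    just below, on, and just above each boundary."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]

def accepts_age(value: int) -> bool:
    """Hypothetical function under test: valid ages are 0..120 inclusive."""
    return 0 <= value <= 120

expected = {-1: False, 0: True, 1: True, 119: True, 120: True, 121: False}
for v in boundary_values(0, 120):
    assert accepts_age(v) == expected[v], v

print(boundary_values(0, 120))  # [-1, 0, 1, 119, 120, 121]
```

An off-by-one defect such as writing `0 < value` instead of `0 <= value` would pass a mid-range check like 35 but fail the boundary value 0.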

Comparison Testing: Test case results are compared with the features/results of other products, e.g. a competitor's product.

Graph Based Testing: Cause-and-effect graphs are generated, and cyclomatic complexity is considered in deriving the test cases.

White Box Testing:

Also called ‘Structural Testing’ or ‘Glass Box Testing’, it tests the code itself while keeping the system specifications in mind.

Because the inner workings of the code are considered, it is typically performed by developers.

Mutation Testing: A number of mutants of the same program are created, each with a minor change. Given the same test cases, none of the mutants' results should coincide with the result of the original program; a test suite that detects every mutant in this way is considered strong.
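A hand-rolled Python sketch of mutation testing. The original function `is_adult` and the three mutants are hypothetical; a mutant is "killed" when at least one test input makes its result differ from the original's, and surviving mutants point to gaps in the tests.

```python
def is_adult(age: int) -> bool:
    """Original program under test."""
    return age >= 18

# Hand-made mutants, each differing from the original by one small change.
mutants = {
    ">= changed to >": lambda age: age > 18,
    "18 changed to 17": lambda age: age >= 17,
    ">= changed to <=": lambda age: age <= 18,
}

def survivors(test_inputs: list) -> list:
    """Names of mutants whose results match the original on every input."""
    return [
        name for name, mutant in mutants.items()
        if all(mutant(x) == is_adult(x) for x in test_inputs)
    ]

print(survivors([17, 18, 30]))  # []
print(survivors([30]))          # ['>= changed to >', '18 changed to 17']
```

The second call shows the value of the technique: a single mid-range input leaves two mutants alive, revealing that the boundary at 18 is untested.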

Basis Path Testing: Testing is based on flow graph notation and uses cyclomatic complexity and graph matrices.
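Cyclomatic complexity for a single connected flow graph is V(G) = E - N + 2, where E is the number of edges and N the number of nodes; it gives the number of independent (basis) paths to test. A Python sketch, using a hypothetical flow graph of an if/else followed by a while loop:

```python
def cyclomatic_complexity(graph: dict) -> int:
    """V(G) = E - N + 2 for one connected flow graph, where graph maps
    each node to the list of nodes it can branch to."""
    nodes = set(graph) | {dst for dsts in graph.values() for dst in dsts}
    edges = sum(len(dsts) for dsts in graph.values())
    return edges - len(nodes) + 2

# Flow graph of: if (a) {...} else {...}; while (b) {...}
flow = {
    1: [2, 3],  # if: true branch -> 2, false branch -> 3
    2: [4],
    3: [4],
    4: [5, 6],  # while condition: body -> 5, exit -> 6
    5: [4],     # loop back to the condition
    6: [],
}
print(cyclomatic_complexity(flow))  # 3  (7 edges - 6 nodes + 2)
```

With two decisions (the if and the while), V(G) = 3, so three basis paths are needed.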

Control Structure Testing: The control flow (execution paths) is considered for testing. It also checks:

Conditional Testing: Branch Testing, Domain Testing.

Data Flow Testing.

Loop testing: Simple, Nested, Conditional, and Unstructured Loops

Unit Testing:

Unit Testing is primarily carried out by the developers themselves.

Deals with the functional correctness and completeness of individual program units.

White box testing methods are employed.

Integration Testing:

Deals with testing when several program units are integrated.

Regression testing: A change of behavior caused by a modification or addition is called a ‘regression’. Regression testing is used to detect such changes so they can be corrected.

Incremental Integration Testing: Checks for bugs that appear when a module is integrated with the existing modules.

Smoke Testing: A battery of tests that checks the basic functionality of the program. If it fails, the program is not sent for further testing.

System Testing:

Deals with testing the whole program system for its intended purpose.

Recovery testing: The system is forced to fail, and testers check how well it recovers from the failure.

Security Testing: Checks the system's ability to defend its programs and data against hostile attack.

Load & Stress Testing: The system is tested for max load and extreme stress points are figured out.

Performance Testing: Used to determine the processing speed.

Installation Testing: Installation & uninstallation is checked out in the target platform.

Acceptance Testing:

UAT ensures that the project satisfies the customer requirements.

Alpha Testing: It is the test done by the client at the developer’s site.

Beta Testing: This is the test done by the end-users at the client’s site.

Long Term Testing: Checks for faults that occur during long-term use of the product.

Compatibility Testing: Testing to ensure compatibility of an application or Web site with different browsers,
